Our community is always looking to build new features and enhancements to meet the ever changing needs of today’s data and AI ecosystem. As such, we are glad to announce the release of Gravitino 0.5.0, which features Spark engine support, non-tabular data management, messaging data support, and a brand new Python Client. Make sure to keep reading to learn what we’ve done and check out some usage examples.

Core features and enhancements

Apache Spark connector

With our new Spark connector support, we’ll allow seamless integration with one of the most widely used data processing frameworks. Users can now read and write metadata directly from Gravitino, making the management of large-scale data processing pipelines more convenient and unified. If you’re already a heavy Spark user, this will feel natural to plug into your stack compared to before, where we mainly supported Trino. You can refer to the Spark connector to get started and see the specs.

Support for non-tabular data management

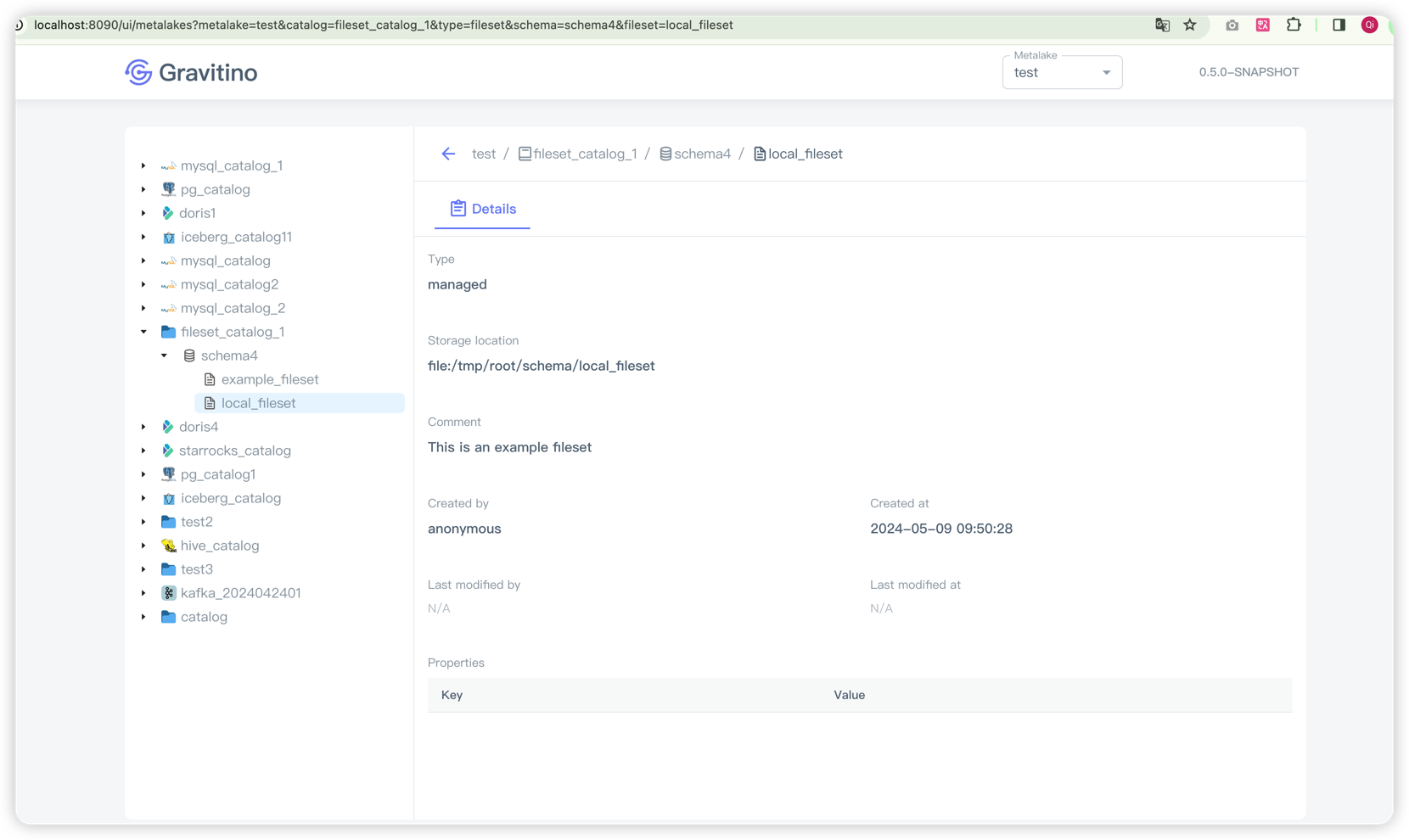

When we group files together as collections as a means of organizing data, we are essentially using “Filesets”. Compared to relational data models like databases, and tables, filesets are used to manage non-tabular data that often does not fit neatly into traditional row-and-column structures of tabular data. Examples of this are unstructured data types like images, documents, audio and video files.

Filesets are useful because they provide a level of abstraction over the storage systems, making it possible to leverage metadata to manage the files, much like a traditional database would do with records in a table. This abstraction brings possibilities like life cycle management, security permissions, and other metadata-level management such as schema or partition information to non-tabular data.

Many modern data infrastructures leverage these formats for data processing, especially with the surge of AI and ML. Their models and data management systems will usually need to use both tabular and non-tabular data, so managing both with a single application can save a lot of headaches for engineers. These difficulties become harder at scale when it comes to data operations, enforcing policies, and dealing with complex storage architectures.

Now that Gravitino supports Fileset features, managing non-tabular data on storage such as HDFS, S3, and other Hadoop-compatible systems is simpler and more integrated. This means you can manage permissions, track usage, and oversee the end-to-end lifecycle of all your data, no matter where the data physically resides. (Issue #1241, refer to Fileset catalog for more details).

Here is a brief example of how to use Fileset in Gravitino.

- Shell

- Java

curl -X POST -H "Accept: application/vnd.gravitino.v1+json" \

-H "Content-Type: application/json" -d '{

"name": "local_fileset",

"comment": "This is an local fileset",

"type": "MANAGED",

"storageLocation": "file:/tmp/root/schema/local_fileset",

"properties": {}

}' http://localhost:8090/api/metalakes/metalake/catalogs/fileset_catalog_1/schemas/schema4/filesets

GravitinoClient gravitinoClient = GravitinoClient

.builder("http://127.0.0.1:8090")

.withMetalake("metalake")

.build();

Catalog catalog = gravitinoClient.loadCatalog(NameIdentifier.of("metalake", "catalog"));

FilesetCatalog filesetCatalog = catalog.asFilesetCatalog();

Map<String, String> propertiesMap = ImmutableMap.<String, String>builder()

.build();

filesetCatalog.createFileset(

NameIdentifier.of("metalake", "fileset_catalog_1", "schema4", "local_fileset"),

"This is an local fileset",

Fileset.Type.MANAGED,

"file:/tmp/root/schema/local_fileset",

propertiesMap,

);

After creating a Fileset catalog, we can use Gravitino Virtual Filesystem to manage the data using Fileset virtual path:

- Java

- Spark

Configuration conf = new Configuration();

conf.set("fs.AbstractFileSystem.gvfs.impl","com.datastrato.gravitino.filesystem.hadoop.Gvfs");

conf.set("fs.gvfs.impl","com.datastrato.gravitino.filesystem.hadoop.GravitinoVirtualFileSystem");

conf.set("fs.gravitino.server.uri","http://localhost:8090");

conf.set("fs.gravitino.client.metalake","metalake");

Path filesetPath = new Path("gvfs://fileset/fileset_catalog/test_schema/test_fileset_1");

FileSystem fs = filesetPath.getFileSystem(conf);

fs.getFileStatus(filesetPath);

import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder()

.appName("DataFrame Example")

.getOrCreate()

// Assuming the file format is text, we use `text` method to read as a DataFrame

val df = spark.read.text("gvfs://fileset/test_catalog/test_schema/test_fileset_1")

// Show the contents of the DataFrame using `show`

// Setting truncate to false to show the entire content of the row if it is too long

df.show(truncate = false)

// Alternatively, to print the DataFrame contents to the console in a plain format like `foreach(println)`:

df.collect().foreach(println)

// Stop the SparkSession

spark.stop()

Further details can be found in hadoop-catalog and Gravitino Virtual Filesystem.

Messaging data support

Along with the previously mentioned unstructured data,real-time data analytics and processing has now become commonplace in many modern data systems. To facilitate the management of these systems, Gravitino now supports messaging-type data, including Apache Kafka and Kafka-compatible messaging systems. Users can now seamlessly manage their messaging data alongside their other data sources in a unified way using Gravitino. (Issue #2369, further information can be found at kafka-catalog).

The following is an example of how to create a Kafka catalog, more can be found in Kafka catalog.

- Shell

- Java

curl -X POST -H "Accept: application/vnd.gravitino.v1+json" \

-H "Content-Type: application/json" -d '{

"name": "catalog",

"type": "MESSAGING",

"comment": "comment of kafka catalog",

"provider": "kafka",

"properties": {

"bootstrap.servers": "localhost:9092",

}

}' http://localhost:8090/api/metalakes/metalake/catalogs

GravitinoClient gravitinoClient = GravitinoClient

.builder("http://127.0.0.1:8090")

.withMetalake("metalake")

.build();

Map<String, String> properties = ImmutableMap.<String, String>builder()

// You should replace the following with your own Kafka bootstrap servers that Gravitino can connect to.

.put("bootstrap.servers", "localhost:9092")

.build();

Catalog catalog = gravitinoClient.createCatalog(

NameIdentifier.of("metalake", "catalog"),

Type.MESSAGING,

"kafka", // provider, Gravitino only supports "kafka" for now.

"This is a Kafka catalog",

properties);

// ...

Python client support

Python is the #1 most popular AI/ML programming language, hosting some of the most popular machine learning frameworks such as PyTorch, Tensorflow, and Ray. Like a lot of other core infrastructure tools, Gravitino was originally written in Java but now has added a Python client so that users can use our data management features directly from their Python IDE of choice, like Jupyter-notebook. This allows data engineers and machine learning scientists to consume the metadata in Gravitino natively using Python. Note that currently only Fileset type catalogs are supported through the Python client. (Issue #2229).

The following code is a simple example of how to use the Python client to connect with Gravitino.

- Python

provider: str = "hadoop"

// Related NameIdentifer

schema_ident: NameIdentifier = NameIdentifier.of_schema(metalake_name, catalog_name, schema_name)

fileset_ident: NameIdentifier = NameIdentifier.of_fileset(metalake_name, catalog_name, schema_name, fileset_name)

// Init Gravitino client

gravitino_client = GravitinoClient(uri="http://localhost:8090", metalake_name=metalake_name)

// Create catalog

catalog = gravitino_client.create_catalog(

ident=catalog_ident,

type=Catalog.Type.FILESET,

provider=provider,

comment="catalog comment",

properties={}

)

// Create schema

catalog.as_schemas().create_schema(ident=schema_ident, comment="schema comment", properties={})

// Create a fileset

fileset = catalog.as_fileset_catalog().create_fileset(ident=fileset_ident,type=Fileset.Type.MANAGED,comment="comment of fileset",storage_location="file:/tmp/root/schema/local_file",properties={})

Apache Doris support

Tagging onto our Real-Time Analytics support, we are now also supporting Apache Doris in this release. Doris is a high-performance, real-time analytical data warehouse that is known for its speed and ease of use. By adding a Doris catalog, engineers implementing Gravitino will now have more flexibility in their cataloging options for their analytical workloads. (Issue #1339, visit jdbc-doris-catalog for specifics). Related user documents can be found here.

Empowering operations with event listener system

To complement our efforts in enabling real-time, dynamic data infrastructures, Gravitino’s 0.5.0 release now also includes a new Event Listener System for applications to plug into. This new system allows users to track and handle all operational events through use of a hook mechanism for custom events, enhancing capabilities in auditing, real-time monitoring, observability, and integration with other applications. (Issue #2233, detailed at event-listener-configuration). You can refer to the event listener system for more information.

JDBC storage backend

Diversifying its storage options, Gravitino 0.5.0 now supports JDBC backends other than KV storage. This allows the use of popular databases like MySQL or PostgreSQL as the entity store. (Issue #1811, check out storage-configuration for further insights). Users only need to replace the following configurations in the configuration file to use the JDBC storage backend.

- Yaml

gravitino.entity.store = relational

gravitino.entity.store.relational = JDBCBackend

gravitino.entity.store.relational.jdbcUrl = jdbc:mysql://localhost:3306/mydb

gravitino.entity.store.relational.jdbcDriver = com.mysql.cj.jdbc.Driver

gravitino.entity.store.relational.jdbcUser = user

gravitino.entity.store.relational.jdbcPassword = password

Bug fixes and optimizations

Gravitino 0.5.0 also contains many bug fixes and optimizations that enhance overall system stability and performance. These improvements address issues that have been identified by the community through issues and direct feedbacks.

Overall

We show appreciation to the Gravitino community for their continued support and valuable contributions, including feedback and testing. Thanks to the vocal feedback of our users that we are able to innovate and build, so cheers to all those reading this!

To explore Gravitino 0.5.0 release in full, please check the documentation and release notes. Your feedback is invaluable to the community and the project.

Enjoy the Data and AI journey with Gravitino 0.5.0!

Apache®, Apache Doris™, Doris™, Apache Hadoop®, Hadoop®, Apache Kafka®, Kafka®, Apache Spark, Spark™, are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries. Java and MySQL are registered trademarks of Oracle and/or its affiliates. Python is a registered trademark of the Python Software Foundation. PostgreSQL is a registered trademark of the PostgreSQL Community Association of Canada.